AWS Infrastructure Part 2 - ECS and Terraform

Designing infrastructure using AWS services and Terraform

Understanding AMI and ECS

It’s time to take things one step further and explore the features of Terraform to learn about Infrastructure as Code (IaC). But before we do so, let’s take a look at the two components that will be the main building blocks of this tutorial: AMI and ECS.

Amazon Machine Images (AMI)

First of all, what is an AMI?

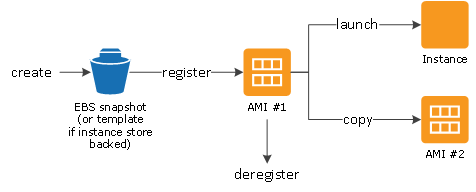

An Amazon Machine Image (AMI) is the template from which virtual machine instances are launched. It is the blueprint configuration for the instances and, in effect, a backup of an entire EC2 instance. When one of the machines in your pipeline dies, an AMI can be used to quickly spin up a replacement and fill the gap. During the AMI-creation process, Amazon EC2 creates snapshots of your instance’s root volume and any other Amazon Elastic Block Store (Amazon EBS) volumes attached to the instance. This allows you to replicate both an instance’s configuration and the state of all of the EBS volumes that are attached to that instance.

Packer

The dependencies and packages installed on an AMI need constant updates, so you do not want to create the AMI manually. Instead, you want to check for updates and, when there are any, build new images automatically using tools like Packer or EC2 Image Builder. Packer is a natural fit when using Terraform, as it is also built by HashiCorp and can be used across different cloud providers.
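
As a rough illustration of such an automated build, a minimal Packer template might look like the sketch below. The region, instance type, base-image name filter, and the update command are assumptions made for illustration, not settings taken from this tutorial.

```hcl
packer {
  required_plugins {
    amazon = {
      version = ">= 1.2.0"
      source  = "github.com/hashicorp/amazon"
    }
  }
}

locals {
  # Strip separators from the build time so it can be used in the AMI name.
  timestamp = regex_replace(timestamp(), "[- TZ:]", "")
}

source "amazon-ebs" "ecs_node" {
  ami_name      = "ecs-node-${local.timestamp}" # hypothetical naming scheme
  instance_type = "t3.micro"                    # assumption
  region        = "us-west-2"                   # assumption
  ssh_username  = "ec2-user"

  # Start from the most recent Amazon-owned image matching the filter.
  # The name pattern is an assumption; adjust it to the base image you need.
  source_ami_filter {
    filters = {
      name                = "amzn2-ami-ecs-hvm-*-x86_64-ebs"
      root-device-type    = "ebs"
      virtualization-type = "hvm"
    }
    most_recent = true
    owners      = ["amazon"]
  }
}

build {
  sources = ["source.amazon-ebs.ecs_node"]

  # Bake package updates into the image at build time.
  provisioner "shell" {
    inline = ["sudo yum update -y"]
  }
}
```

Running `packer init .` followed by `packer build .` in the template's directory would produce a new AMI. Scheduling that build (for example from a CI job) is what keeps the image current.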

ECS? Why not EKS?

We have the AMI built by Packer. What is next? We need ECS to spin up virtual machines from the image and run containers within these machines, so that the backend can handle jobs efficiently and deliver the results back to the clients.

Let’s check what ECS is conceptually. Amazon Elastic Container Service (ECS) is Amazon’s homegrown container orchestration platform. Container orchestration: that sounds awfully familiar, doesn’t it? Yes, Kubernetes. AWS has over 200 services, and quite a few of them are related to containers. Why not use Elastic Kubernetes Service or AWS Fargate?

EKS

As we explored in the previous tutorial, Kubernetes (K8s) is a powerful tool for container orchestration and is the most prevalent option in the DevOps community. Amazon Elastic Kubernetes Service (EKS) is Amazon’s fully managed Kubernetes-as-a-Service platform that enables you to deploy, run, and manage Kubernetes applications, containers, and workloads in the AWS public cloud or on premises.

Using EKS, you don’t have to install, run, or configure Kubernetes yourself. You just need to set up worker nodes and link them to the Amazon EKS endpoint. Workloads are then scaled according to your configuration, running on EC2 machines or Fargate depending on your layout.

ECS

What about ECS? ECS is a fully managed container service that enables you to focus on improving your code and service delivery instead of building, scaling, and maintaining your own Kubernetes cluster management infrastructure. Kubernetes can be very handy, but it is difficult to coordinate because it requires additional layers of logic. ECS avoids those layers, which reduces management overhead and scaling complexity while still offering a good share of the benefits of container orchestration.

ECS is simpler to use, requires less expertise and management overhead, and can be more cost-effective. EKS can provide higher availability for your service, at the expense of additional cost and complexity. My conclusion, setting aside features such as the Kubernetes control plane, is this: if availability is not the most crucial aspect of your service and cost matters more, ECS should be the preferred option.

ECS more in depth

Okay, now it makes sense why we want to go with ECS for now: it gets things up to speed simply with Docker container images. Let’s dig deeper into various aspects of ECS. Three important concepts to understand within ECS are the Cluster, the Task, and the Service.

Cluster

ECS runs your containers on a cluster of Amazon EC2 (Elastic Compute Cloud) virtual machine instances pre-installed with Docker. In our case, we might use the ECS GPU-optimized AMI in order to run deep-learning applications such as the NVIDIA Triton Inference Server. An Amazon ECS cluster groups together Tasks and Services, and allows for shared capacity and common configurations. Cluster metrics such as CPU and memory utilization can be monitored closely in Amazon CloudWatch.
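
A minimal sketch of a cluster in Terraform, together with a lookup of the current ECS GPU-optimized AMI, could look like the following. The cluster name and the choice to enable Container Insights are assumptions for illustration; the resource is named `foo` only to line up with the `aws_ecs_cluster.foo` reference used in the Service example in this tutorial.

```hcl
# The ECS cluster itself. The name is hypothetical.
resource "aws_ecs_cluster" "foo" {
  name = "example-cluster"

  # Optional: send more detailed container metrics to CloudWatch.
  setting {
    name  = "containerInsights"
    value = "enabled"
  }
}

# AWS publishes the recommended ECS-optimized AMI IDs as public SSM
# parameters. This one points at the GPU variant for Amazon Linux 2.
data "aws_ssm_parameter" "ecs_gpu_ami" {
  name = "/aws/service/ecs/optimized-ami/amazon-linux-2/gpu/recommended/image_id"
}
```

The value `data.aws_ssm_parameter.ecs_gpu_ami.value` can then be passed as the image ID of the instances that join the cluster, or used as the base image for a Packer build.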

A Cluster can run many Services. If you have multiple applications as part of your product, you may wish to put several of them on one Cluster. This makes more efficient use of the resources available and minimizes setup time.

Task

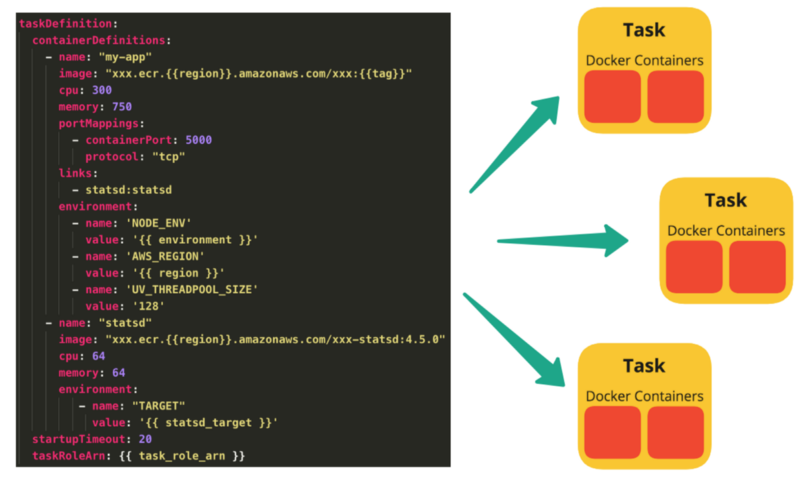

A Task definition is the blueprint describing which Docker containers to run, and it represents your application. In our example, it would be two containers. The Task definition details the images to use, the CPU and memory to allocate, environment variables, ports to expose, and how the containers interact.

In Terraform, this would look like:

    resource "aws_ecs_task_definition" "service" {
      family = "service"

      # Container settings (images, CPU, memory, ports) live in a separate JSON file.
      container_definitions = file("task-definitions/service.json")

      # Docker volume backed by an EFS file system, mounted over NFS.
      volume {
        name = "service-storage"

        docker_volume_configuration {
          scope         = "shared"
          autoprovision = true
          driver        = "local"

          driver_opts = {
            "type"   = "nfs"
            "device" = "${aws_efs_file_system.fs.dns_name}:/"
            "o"      = "addr=${aws_efs_file_system.fs.dns_name},rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"
          }
        }
      }
    }
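
The contents of `task-definitions/service.json` are not shown here. As a sketch of what a two-container definition could contain, the same information can be written inline with `jsonencode`. The container names, images, sizes, ports, and environment variable below are all hypothetical.

```hcl
resource "aws_ecs_task_definition" "inline_example" {
  family = "service-inline" # hypothetical family name

  container_definitions = jsonencode([
    {
      # Main application container (hypothetical image).
      name      = "app"
      image     = "example/app:latest"
      cpu       = 256 # CPU units (1024 = one vCPU)
      memory    = 512 # hard memory limit in MiB
      essential = true

      environment = [
        { name = "LOG_LEVEL", value = "info" }
      ]

      portMappings = [
        { containerPort = 8080, hostPort = 8080 }
      ]
    },
    {
      # Helper container that starts only after "app" has started.
      name      = "sidecar"
      image     = "example/sidecar:latest"
      cpu       = 128
      memory    = 256
      essential = false

      dependsOn = [
        { containerName = "app", condition = "START" }
      ]
    }
  ])
}
```

Keeping the definition inline lets Terraform interpolate values such as image tags, while a separate JSON file is easier to share with other tooling. Either approach describes the same Task.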

Service

A Service is used to guarantee that you always have some number of Tasks running at all times. If a Task’s container exits due to an error, or the underlying EC2 instance fails and is replaced, the ECS Service will replace the failed Task. This is why we create Clusters: so that the Service has plenty of resources in terms of CPU, memory, and network ports to use.

Here is a sample ECS Service defined in Terraform for MongoDB.

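    resource "aws_ecs_service" "mongo" {
      name            = "mongodb"
      cluster         = aws_ecs_cluster.foo.id
      task_definition = aws_ecs_task_definition.mongo.arn
      desired_count   = 3 # number of Tasks the Service keeps running
      iam_role        = aws_iam_role.foo.arn

      # Make sure the role policy exists before the Service is created.
      depends_on = [aws_iam_role_policy.foo]

      # Pack Tasks onto as few instances as possible, based on CPU.
      ordered_placement_strategy {
        type  = "binpack"
        field = "cpu"
      }

      # Register the Tasks' container port with the load balancer target group.
      load_balancer {
        target_group_arn = aws_lb_target_group.foo.arn
        container_name   = "mongo"
        container_port   = 8080
      }

      # Only place Tasks on instances in these Availability Zones.
      placement_constraints {
        type       = "memberOf"
        expression = "attribute:ecs.availability-zone in [us-west-2a, us-west-2b]"
      }
    }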

Load Balancer

Okay, a Task is our application, and a Service ensures our Tasks are running properly. When requests come in, how do we know which ECS instance, or which Task, each request should be routed to in order to maximize efficiency?

Read this: https://www.pulumi.com/docs/clouds/aws/guides/elb/
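
In Terraform, one possible way to put an Application Load Balancer in front of the Service is sketched below. The variables, resource names, ports, and health check path are assumptions for illustration; the target group is named `foo` only so that it matches the `aws_lb_target_group.foo` reference in the Service example.

```hcl
# Hypothetical inputs: the VPC and public subnets the load balancer lives in.
variable "vpc_id" {
  type = string
}

variable "public_subnet_ids" {
  type = list(string)
}

resource "aws_lb" "foo" {
  name               = "example-alb"
  load_balancer_type = "application"
  subnets            = var.public_subnet_ids
}

# Tasks registered by the ECS Service become targets in this group.
resource "aws_lb_target_group" "foo" {
  name     = "example-tg"
  port     = 8080
  protocol = "HTTP"
  vpc_id   = var.vpc_id

  # Requests are only routed to targets that pass this check.
  health_check {
    path = "/"
  }
}

# Accept traffic on port 80 and forward it to the target group.
resource "aws_lb_listener" "http" {
  load_balancer_arn = aws_lb.foo.arn
  port              = 80
  protocol          = "HTTP"

  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.foo.arn
  }
}
```

The load balancer spreads incoming requests across the healthy Tasks in the target group, so clients need only its DNS name rather than the address of any individual instance.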

Auto Scaling Group

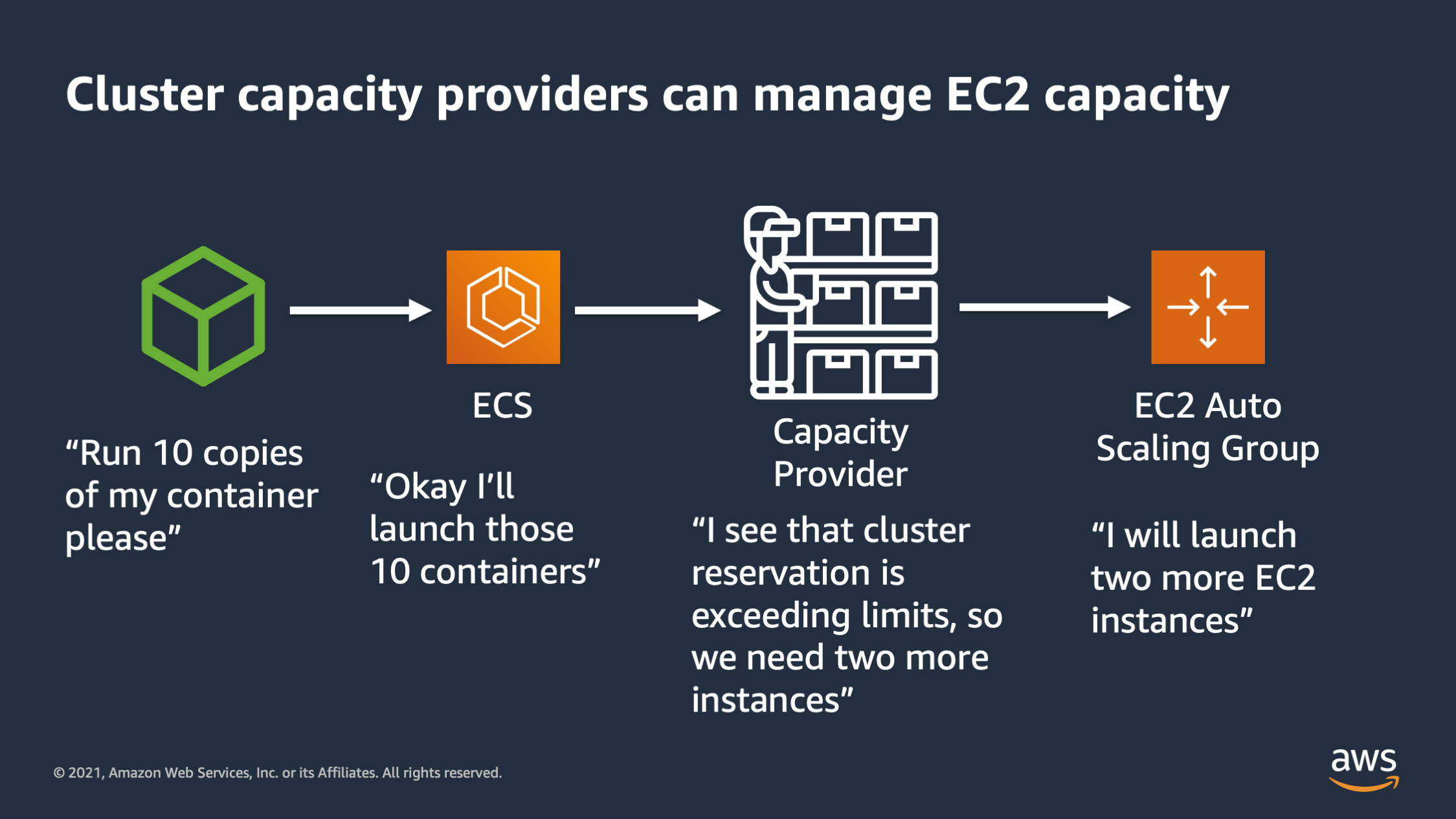

When the virtual machines are created, they are registered to the cluster. The cluster continuously monitors the overall resource usage of those instances. Amazon ECS can manage the scaling of Amazon EC2 instances that are registered to your cluster. This is referred to as Amazon ECS cluster auto scaling.
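
How an instance ends up registered to a cluster can be sketched with a launch template and an Auto Scaling group. On the ECS-optimized AMI, the ECS agent reads the cluster name from `/etc/ecs/ecs.config`, so the user data below writes it there at boot. All variables, names, and sizes are hypothetical, and the instance profile is assumed to already grant the permissions the ECS agent needs.

```hcl
# Hypothetical inputs.
variable "cluster_name" {
  type = string
}

variable "ami_id" {
  type = string
}

variable "instance_profile_name" {
  type = string
}

variable "private_subnet_ids" {
  type = list(string)
}

resource "aws_launch_template" "ecs_node" {
  name_prefix   = "ecs-node-"
  image_id      = var.ami_id
  instance_type = "t3.medium" # assumption

  iam_instance_profile {
    name = var.instance_profile_name
  }

  # Tell the ECS agent which cluster to join when the instance boots.
  user_data = base64encode(<<-EOT
    #!/bin/bash
    echo "ECS_CLUSTER=${var.cluster_name}" >> /etc/ecs/ecs.config
  EOT
  )
}

resource "aws_autoscaling_group" "ecs_nodes" {
  name_prefix         = "ecs-nodes-"
  min_size            = 1
  max_size            = 4
  vpc_zone_identifier = var.private_subnet_ids

  launch_template {
    id      = aws_launch_template.ecs_node.id
    version = "$Latest"
  }
}
```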

When you use an Auto Scaling group capacity provider with managed scaling turned on, you set a target percentage (the targetCapacity) for the utilization of the instances in this Auto Scaling group. Amazon ECS then manages the scale-in and scale-out actions of the Auto Scaling group based on the resource utilization that your tasks use from this capacity provider.
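
A sketch of such a capacity provider in Terraform follows. The variables, the provider name, the 80 percent target, and the step sizes are assumptions chosen for illustration.

```hcl
# Hypothetical inputs: an existing cluster and Auto Scaling group.
variable "cluster_name" {
  type = string
}

variable "asg_arn" {
  type = string
}

resource "aws_ecs_capacity_provider" "ec2" {
  name = "example-ec2"

  auto_scaling_group_provider {
    auto_scaling_group_arn         = var.asg_arn
    managed_termination_protection = "DISABLED"

    managed_scaling {
      status = "ENABLED"

      # Corresponds to targetCapacity: ECS aims to keep the instances in
      # the group about 80% utilized by the Tasks placed on them.
      target_capacity           = 80
      minimum_scaling_step_size = 1
      maximum_scaling_step_size = 4
    }
  }
}

# Attach the capacity provider to the cluster and make it the default.
resource "aws_ecs_cluster_capacity_providers" "this" {
  cluster_name       = var.cluster_name
  capacity_providers = [aws_ecs_capacity_provider.ec2.name]

  default_capacity_provider_strategy {
    capacity_provider = aws_ecs_capacity_provider.ec2.name
    weight            = 1
  }
}
```

A target below 100 leaves spare headroom on the instances so new Tasks can start without waiting for a scale-out, at the cost of paying for some idle capacity.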